February 2026

Custom Interfaces for Chat Agents

February 4th, 2026

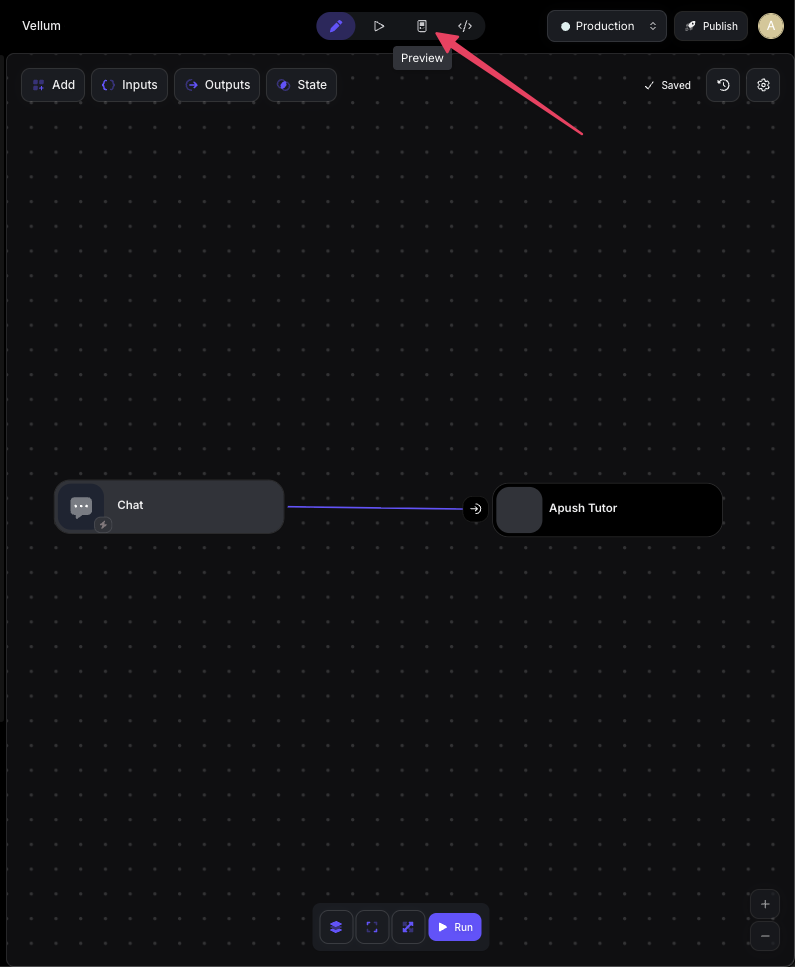

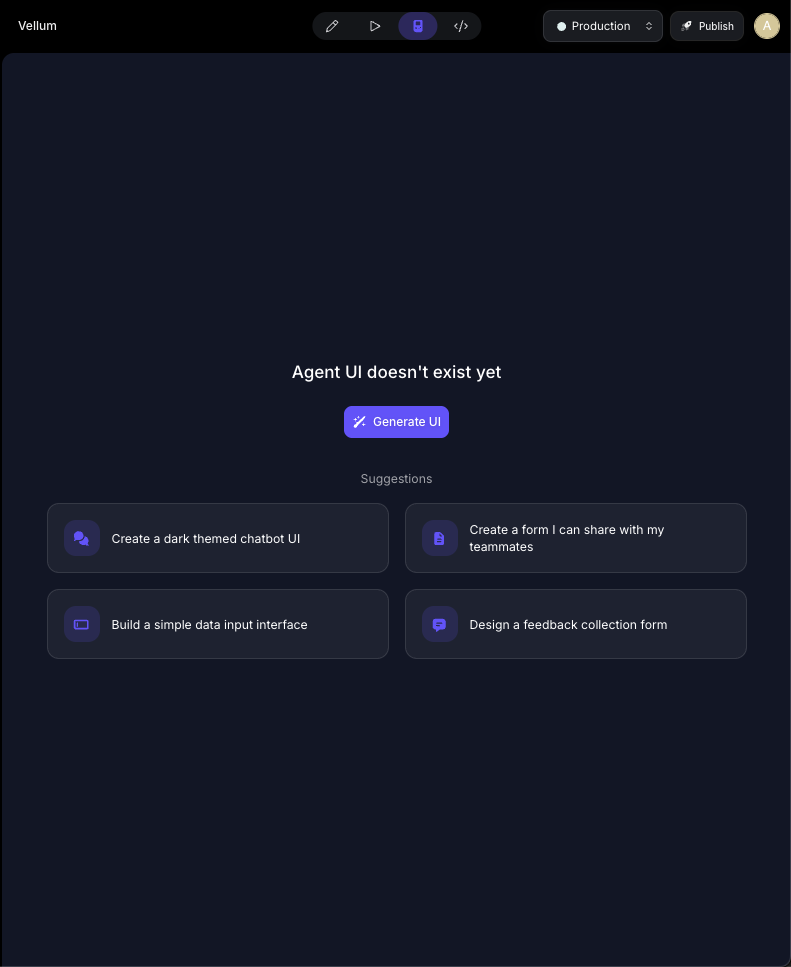

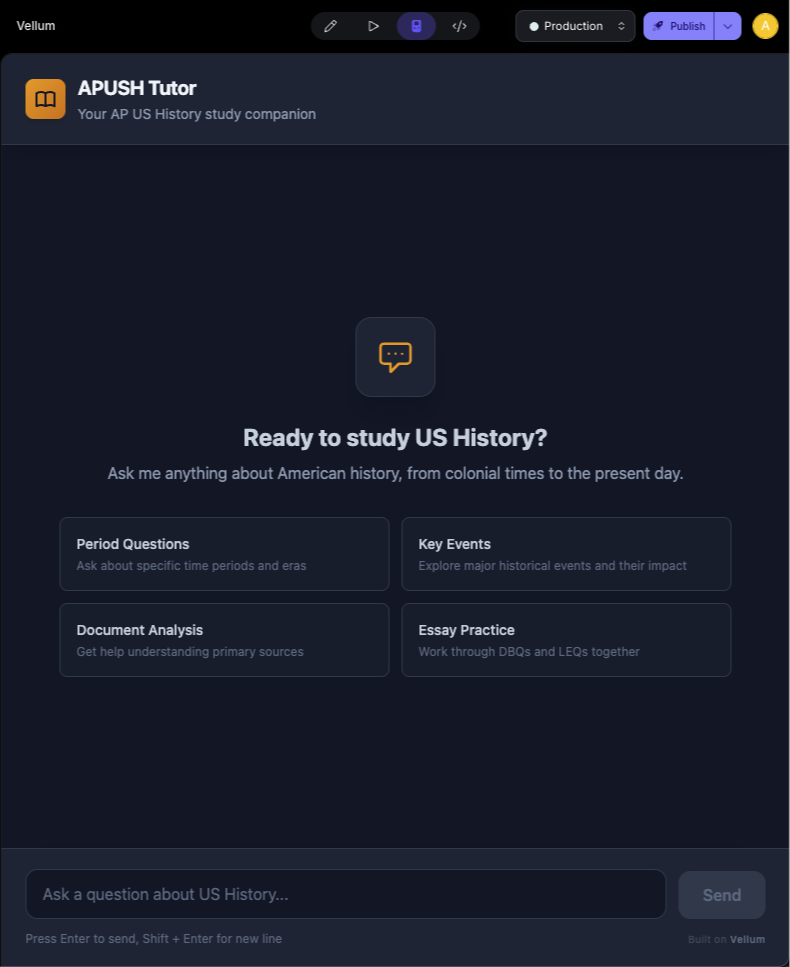

You can now generate custom interfaces for your Chat Agents. Open the new “Preview” mode from the Workflow Sandbox toolbar and build your interface by chatting with Vellum. The interface code is versioned and deployed alongside your Workflow, so publishing your Chat Agent also publishes its interface. You can also update the interface independently of the Workflow.

Microsoft Teams Integration

February 3rd, 2026

Microsoft Teams is now available as a native integration. Connect your Microsoft Teams workspace to Vellum and use it in your Workflows through Agent Nodes or Custom Nodes to send messages, post to channels, and more.

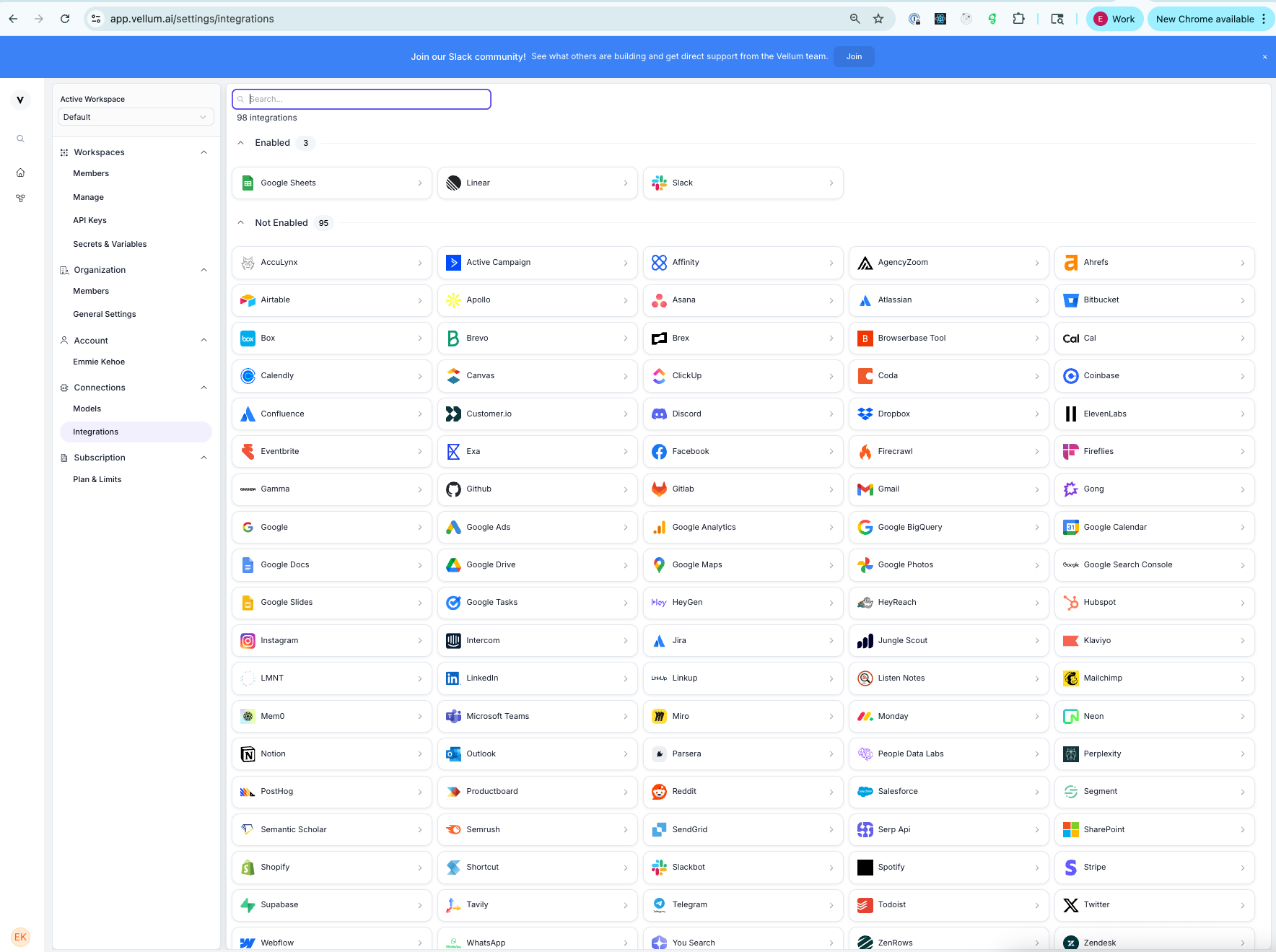

Native Integrations Grouping

February 3rd, 2026

The Integrations page now groups native integrations by Enabled and Disabled, making it easier to see which ones are ready to use with your Agents.

Message Queueing

February 2nd, 2026

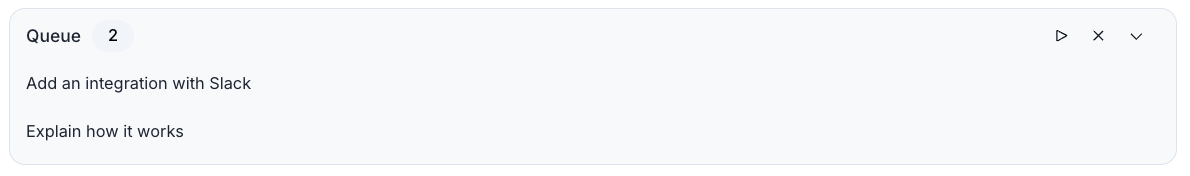

Agent Builder now supports message queueing while a response is being generated. You can send multiple instructions in advance, pause the queue, and let queued messages run automatically once the current response finishes.