July 2025

Support for BaseTen via Vellum

July 31st, 2025

We’ve added support for BaseTen as a Model Host, along with the following BaseTen-hosted models:

- DeepSeek R1 0528

- DeepSeek V3 0324

- Llama 4 Maverick

- Llama 4 Scout

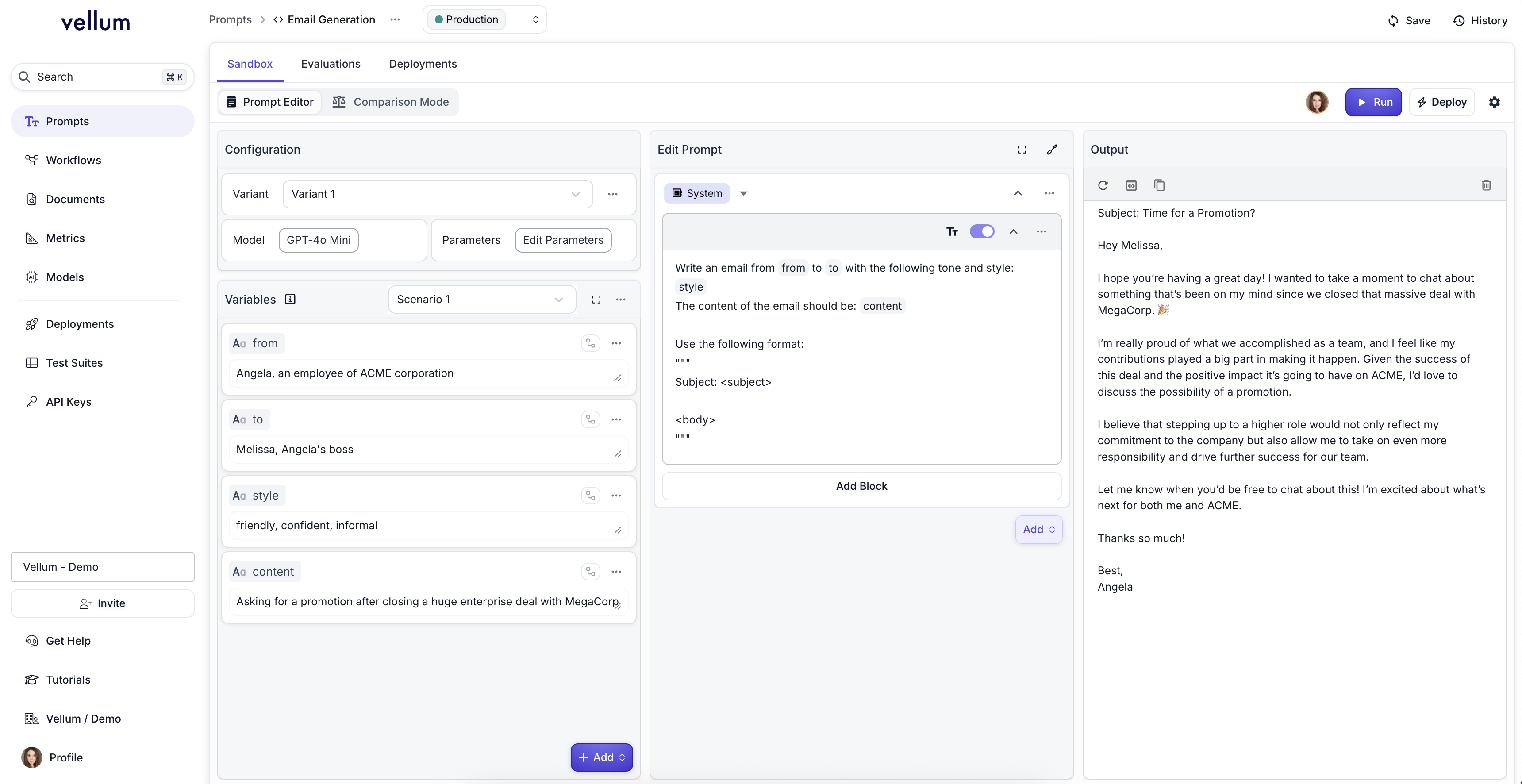

Prompt Editor Layout Update

July 31st, 2025

We’ve redesigned the Prompt Editor layout from 2 columns to 3 for a more focused editing experience. Our hope is that this update helps you get into a flow state while prompting.

The new 3-column layout separates configuration, prompt editing, and output clearly:

- Left Column: Configuration & Variables

- Center Column: Prompt Editor

- Right Column: Output panel

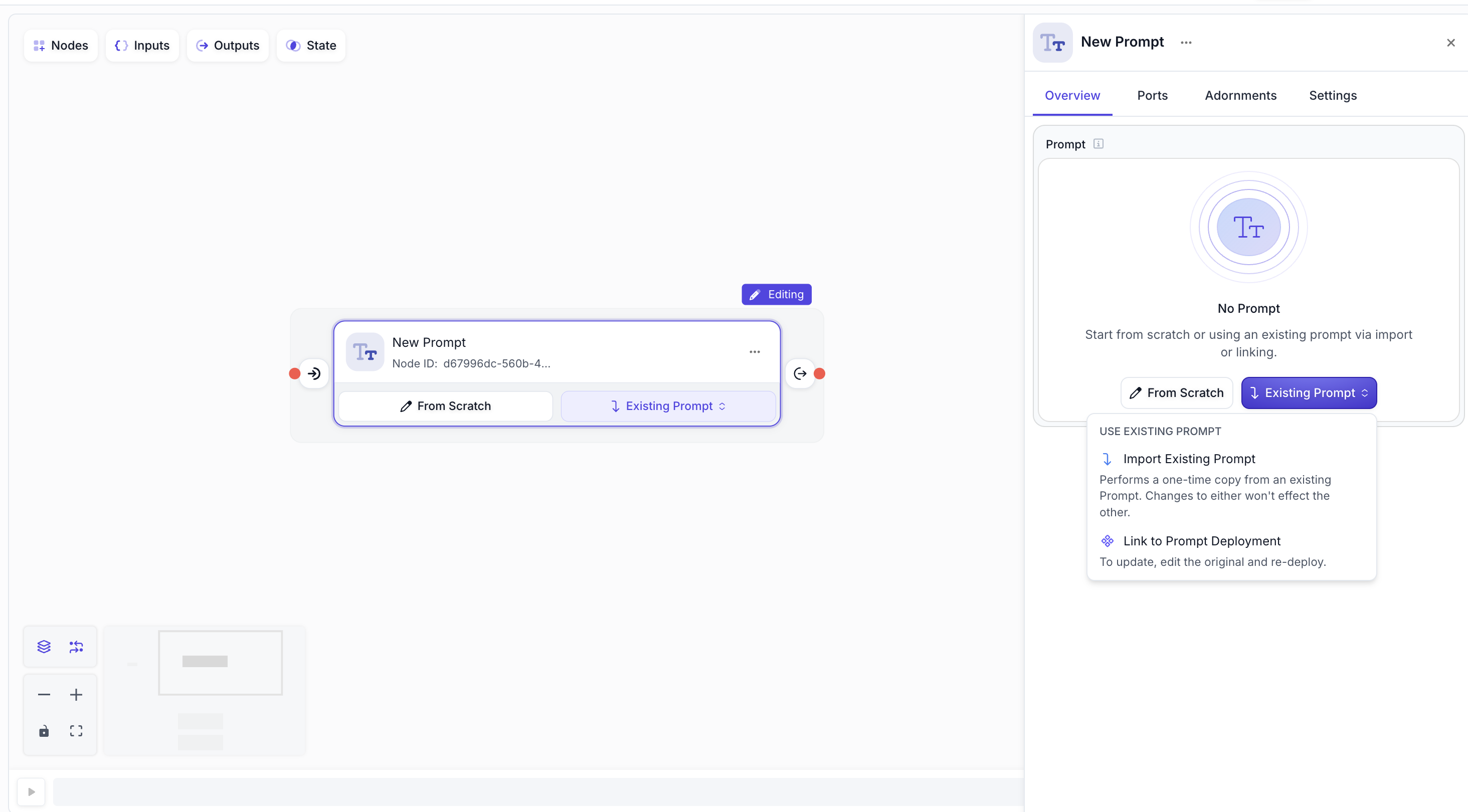

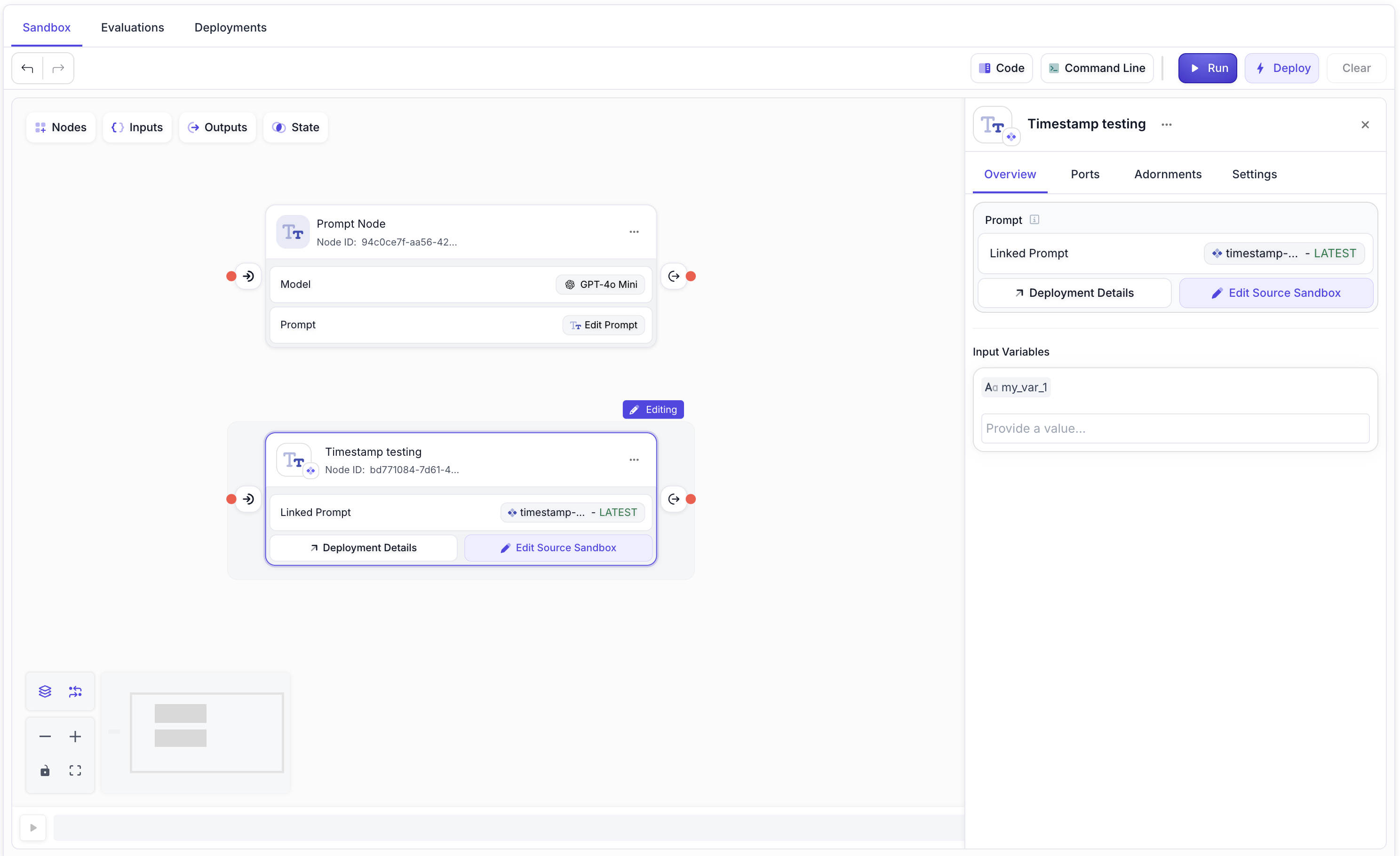

Prompt Node Unification

July 24th, 2025

We’ve simplified the Prompt Node creation experience by unifying the starting point for how all types of Prompt nodes are created. Previously, we had two separate variants of Prompt Nodes: an Inline Variant for creating new prompts from scratch and a Deployment Variant for linking to existing Prompt Deployments.

Now, both functionalities still exist, but they share the same intuitive starting point. This makes it much easier to discover and choose between the three available options:

- From Scratch: Create a new prompt directly within the workflow (useful if the Prompt is used only by this Workflow)

- Import Existing Prompt: Perform a one-time copy from an existing Prompt in your Workspace (useful if you originally defined the Prompt in isolation, but now want to use it in a Workflow)

- Link to Prompt Deployment: Connect to a specific Release Tag of a Prompt Deployment (useful if you want to share a Prompt between multiple Workflows and update it centrally)

As part of this update, we’ve also enhanced the UI for both the Prompt Node itself and its Editor Side Panel to display more helpful information. When you link to a Prompt Deployment, you’ll now see the selected model and high-level details about the linked prompt, including the Deployment name and Release Tag.

We hope that these improvements make it easier to reuse Prompts throughout Vellum and navigate their dependencies. Let us know if you have any feedback!

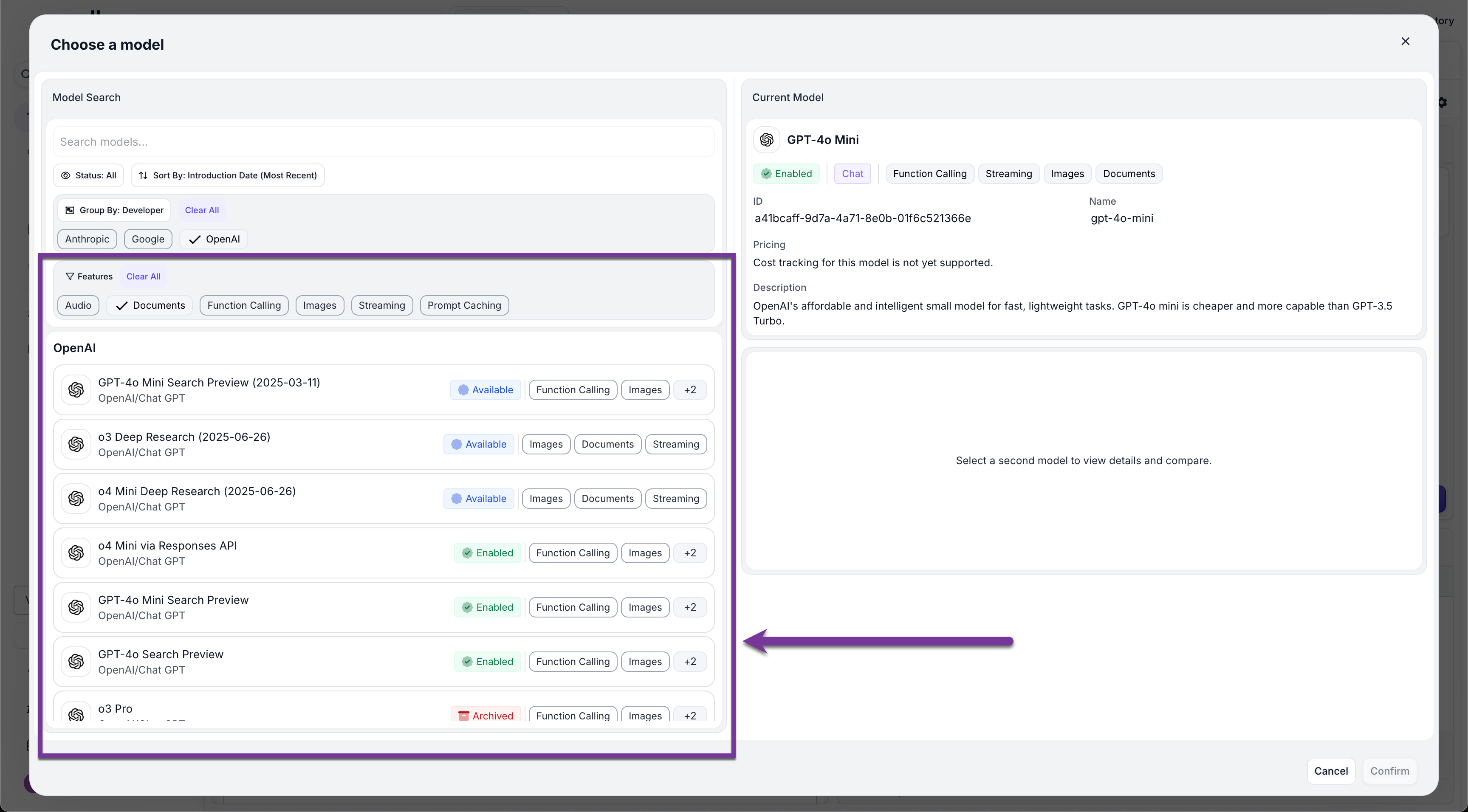

Model Picker Improvements

July 24th, 2025

We’ve made a number of improvements to the Model Picker with the goal of making it easier to find the best LLM for the job.

To start, we’ve changed the default sort order so that models are shown from newest to oldest by introduction date. This lets you quickly find and try out new models as they become available.

The bigger change, though, is the new “Features” filter. When looking for a model, you’ll often want to narrow your search to those that support specific functionality, such as Documents as inputs or Function Calling. You can now do so by selecting the Features you want to filter on.

We’ve also cleaned up the Model option cards to highlight the features each model supports, so that you can see this at a glance rather than needing to click into each one individually.

Thinking Output in Prompt Nodes and Prompt Deployment Nodes

July 21st, 2025

Building on our Reasoning Outputs in Prompt Sandboxes, we’re excited to extend thinking output support to Prompt Nodes and Prompt Deployment Nodes within the Workflows Sandbox. This enhancement brings the same transparency and insight capabilities directly into your workflow development process.

When a Prompt Node or Prompt Deployment Node executes with a model that supports reasoning outputs, you can now view the model’s thinking process directly in the node’s results view. This provides valuable debugging and development insights, allowing you to understand how the model arrives at its conclusions within your workflow context.

Important: While the thinking output is visible for your review and debugging purposes, downstream nodes in your workflow will only receive the final output - the thinking process remains isolated to the results view. This ensures your workflow logic remains unaffected while providing you with valuable insight into the model’s reasoning process.
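The separation described above can be pictured with a small Python sketch. The block shape used here (`type` and `text` keys, with `"THINKING"` and `"TEXT"` as type values) and the `split_thinking` helper are assumptions made for illustration, not Vellum's actual response schema.

```python
from typing import Dict, List, Tuple

Block = Dict[str, str]  # hypothetical shape: {"type": ..., "text": ...}


def split_thinking(blocks: List[Block]) -> Tuple[str, str]:
    """Return (thinking, final_output) from a list of model output blocks."""
    thinking = "\n".join(b["text"] for b in blocks if b["type"] == "THINKING")
    final_output = "".join(b["text"] for b in blocks if b["type"] == "TEXT")
    return thinking, final_output


blocks = [
    {"type": "THINKING", "text": "The user wants a sum, so add 2 and 3."},
    {"type": "TEXT", "text": "2 + 3 = 5"},
]
thinking, final_output = split_thinking(blocks)
# `thinking` corresponds to what the results view displays; only
# `final_output` would be handed to downstream nodes.
```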

Like other reasoning output features, this requires API Version 2025-07-30 to access the thinking capabilities.

API Versioning in Workflows Sandbox

July 21st, 2025

Building on our API Versioning functionality introduced earlier this month, we’re excited to extend this capability to our Workflows Sandbox. You can now specify which version of the Vellum API you want to use when testing and developing your workflows, giving you the same level of control over new features and breaking changes in your workflow development environment.

This enhancement ensures consistency between your workflow development and production environments, allowing you to test new API features before deploying them to production. The API version selector in the Workflows Sandbox works the same way as our other API versioning implementations - simply choose your desired API version to access the corresponding feature set.

This addition complements the existing API versioning support across our platform, providing a unified experience whether you’re working with Prompt Deployments, Workflow Deployments, or developing in the Workflows Sandbox.

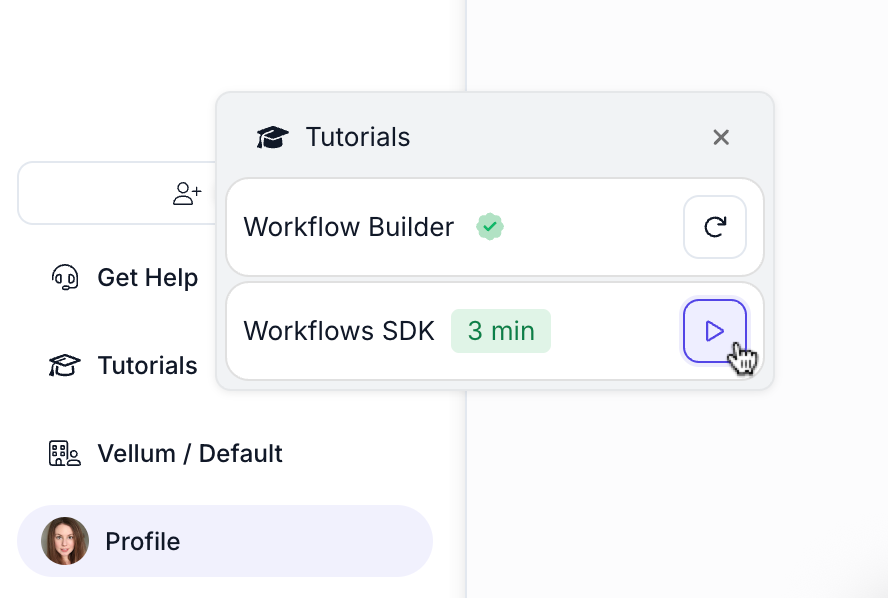

Tutorials Launcher

July 21st, 2025

A new Tutorials menu item is now available in the sidebar, giving you quick access to interactive walkthroughs designed to help you get started in Vellum and explore key features.

The Tutorials Launcher provides structured learning paths to help both new and experienced users discover Vellum’s capabilities through hands-on guidance.

API Node Timeout Configuration

July 21st, 2025

A timeout can now be set on the Workflow API Node. This lets you configure the maximum number of seconds to allow for the API request to complete, preventing Workflows from hanging indefinitely on slow or unresponsive external services.

The timeout setting is configurable in the API Node settings panel and helps ensure reliable workflow execution by providing a clear upper bound on request duration.

Model Page Performance and Search Improvements

July 18th, 2025

The Models page in Vellum now loads faster, and its search bar more reliably finds relevant models based on search queries.

We have a number of exciting improvements coming soon that’ll make it easier to find the right LLM for the job. Stay tuned!

New Workflow Execution API

July 17th, 2025

We’ve introduced a new API endpoint that lets you fetch detailed information about a specific Workflow Execution using its execution ID.

The new endpoint is available at:

This API provides comprehensive details about a workflow execution, including:

- Execution status and metadata

- Input parameters and output results

- Node-level execution details and timing

- Error information if the execution failed

- Cost and usage tracking data

For more information, see the API documentation.

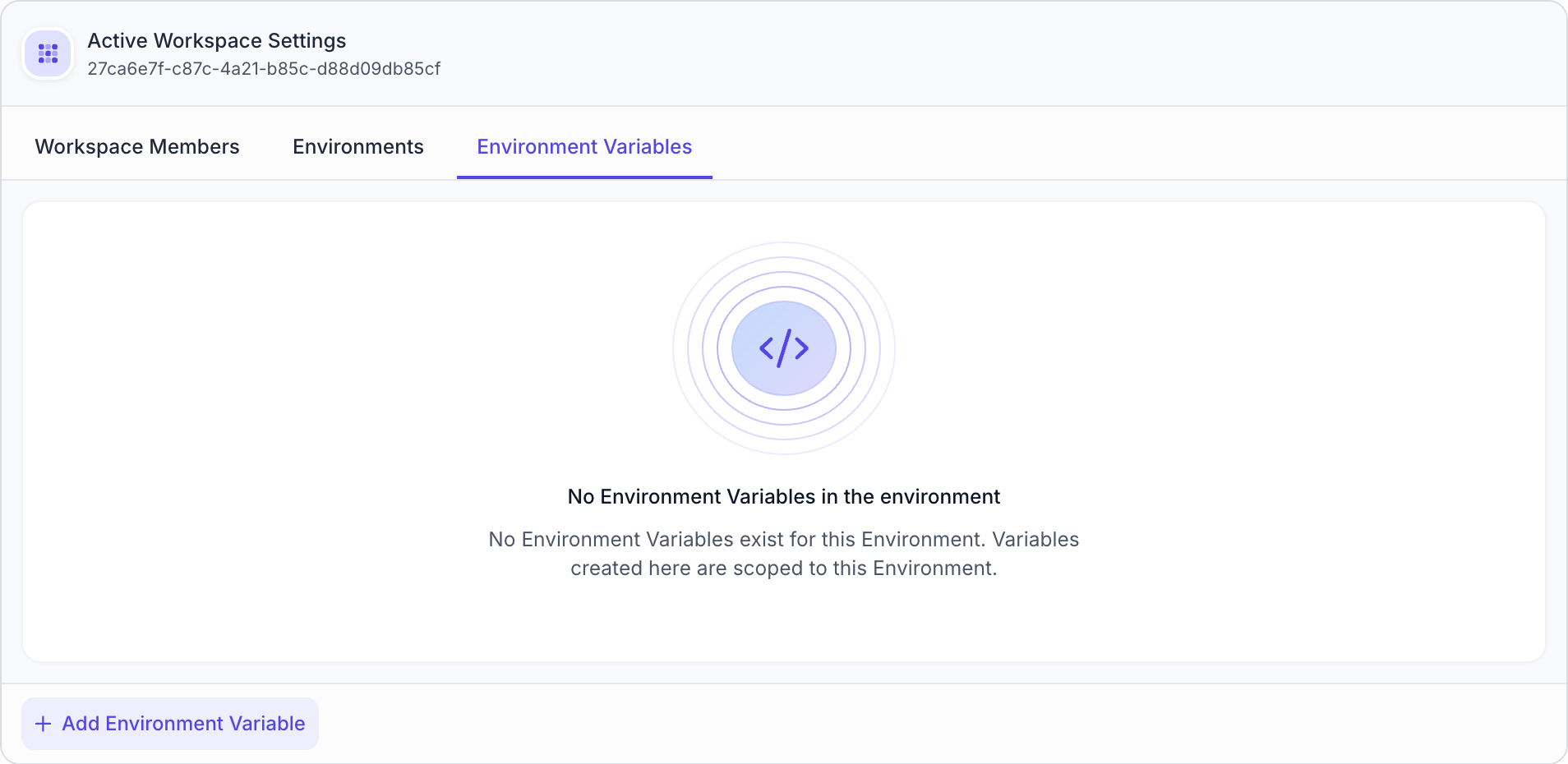

Environment Variables

July 17th, 2025

Hot on the heels of Environments come Environment Variables! Environment Variables let you configure variables whose values differ based on the Environment in which they’re used.

For example, you might have an API Node in a Vellum Workflow that connects to the Firecrawl API. You may want to use one Firecrawl API key when the Workflow runs in your Development Environment and a different one when it runs in Production.

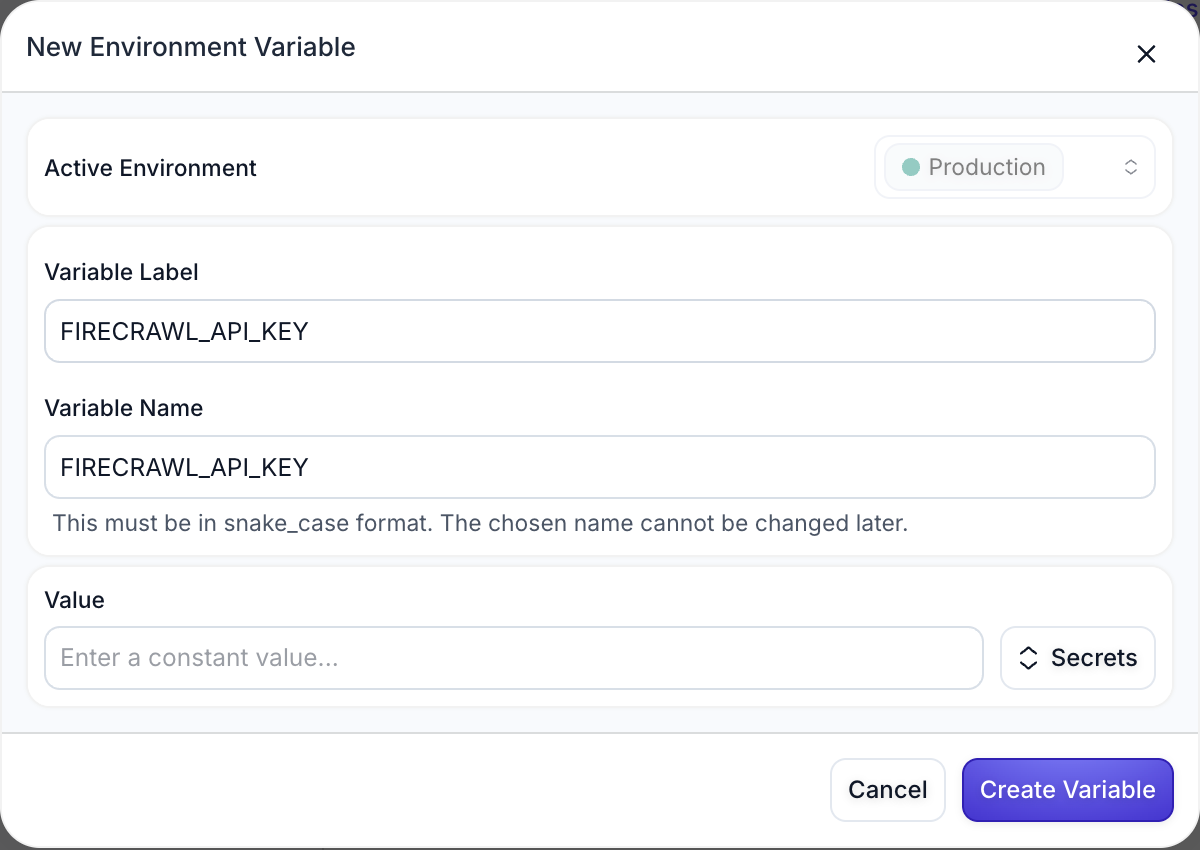

Creating Environment Variables

To create Environment Variables, navigate to the Environment Variables tab within Workspace Settings and define a new Environment Variable with a descriptive name (e.g., FIRECRAWL_API_KEY).

Configuring Values Per Environment

You can then specify the variable’s value within each Environment. The value can be a constant string, which is good for values that aren’t sensitive, or can reference a Secret, which is more common and is used for sensitive values like API keys.

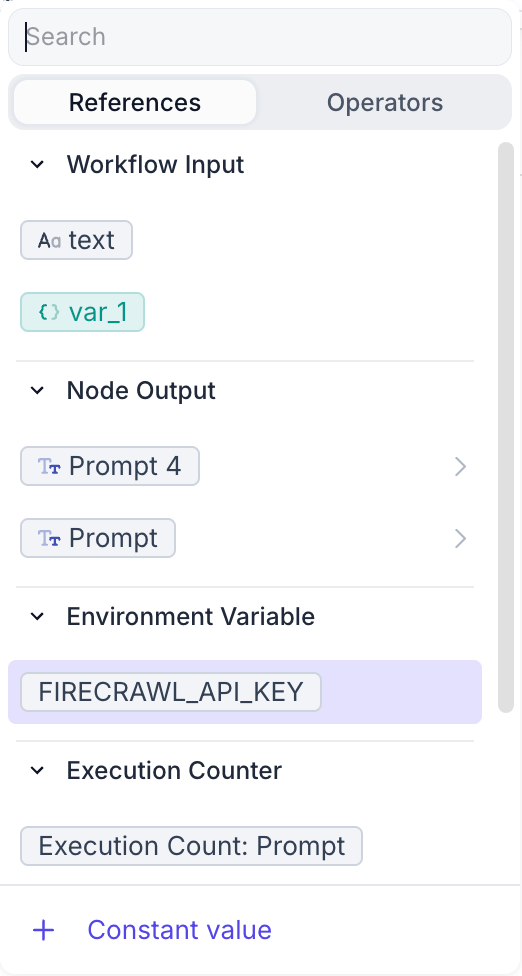

Using in Workflows

Once defined, the Environment Variable can be referenced as a Node input in Workflows. Its value is determined by whichever Environment you have active.

This feature makes it much easier to manage configuration differences across your development pipeline while maintaining security best practices for sensitive values.
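As a rough mental model, the resolution behavior described above might look like the following Python sketch. The `EnvironmentVariable` and `SecretRef` classes, the Secret name, and the placeholder key values are all hypothetical and exist only for illustration; they are not part of Vellum's SDK.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass(frozen=True)
class SecretRef:
    """Points at a Secret by name instead of holding the value inline."""
    name: str


@dataclass
class EnvironmentVariable:
    name: str
    # Maps Environment name -> constant string or Secret reference.
    values: Dict[str, Union[str, SecretRef]] = field(default_factory=dict)

    def resolve(self, active_environment: str, secrets: Dict[str, str]) -> str:
        """Pick the value for the active Environment, following Secret refs."""
        value = self.values[active_environment]
        if isinstance(value, SecretRef):
            return secrets[value.name]
        return value


firecrawl_key = EnvironmentVariable(
    name="FIRECRAWL_API_KEY",
    values={
        "Development": "dev-placeholder-key",           # constant string
        "Production": SecretRef("FIRECRAWL_PROD_KEY"),  # Secret reference
    },
)
secrets = {"FIRECRAWL_PROD_KEY": "prod-placeholder-key"}
dev_value = firecrawl_key.resolve("Development", secrets)
prod_value = firecrawl_key.resolve("Production", secrets)
```

The same variable name yields a different value depending on which Environment is active, so the Node input that references it does not need to change.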

For more details, see the updated Environments documentation.

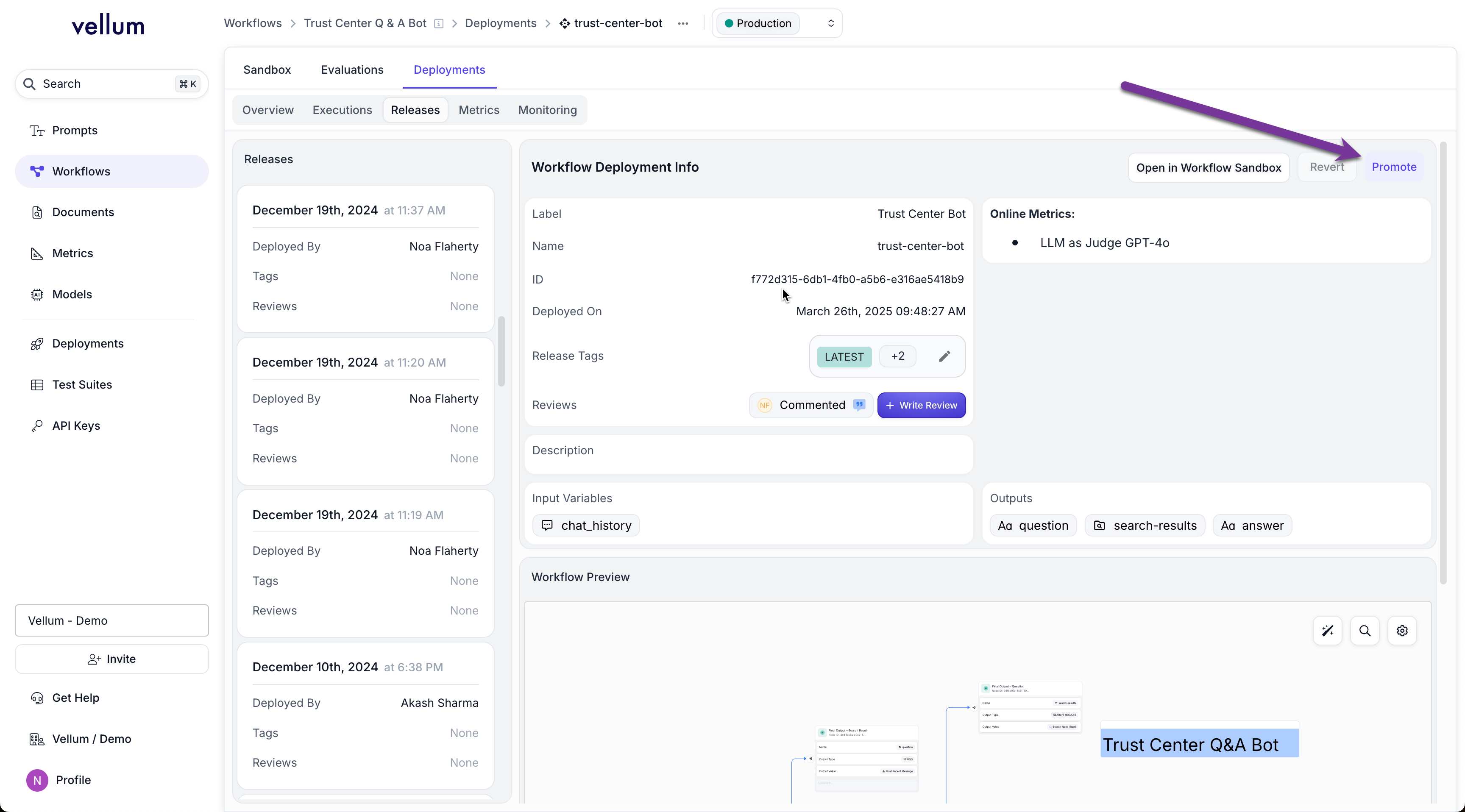

Promoting a Release to Another Environment

July 16th, 2025

You can now promote a Release from one Environment to another, streamlining your deployment workflow. After deploying a Prompt or Workflow to one Environment (e.g., “Development”) and completing your testing and QA, you can easily promote that Release to another Environment (e.g., “Production”).

To promote a Release, navigate to the Release you want to promote and click the “Promote” button.

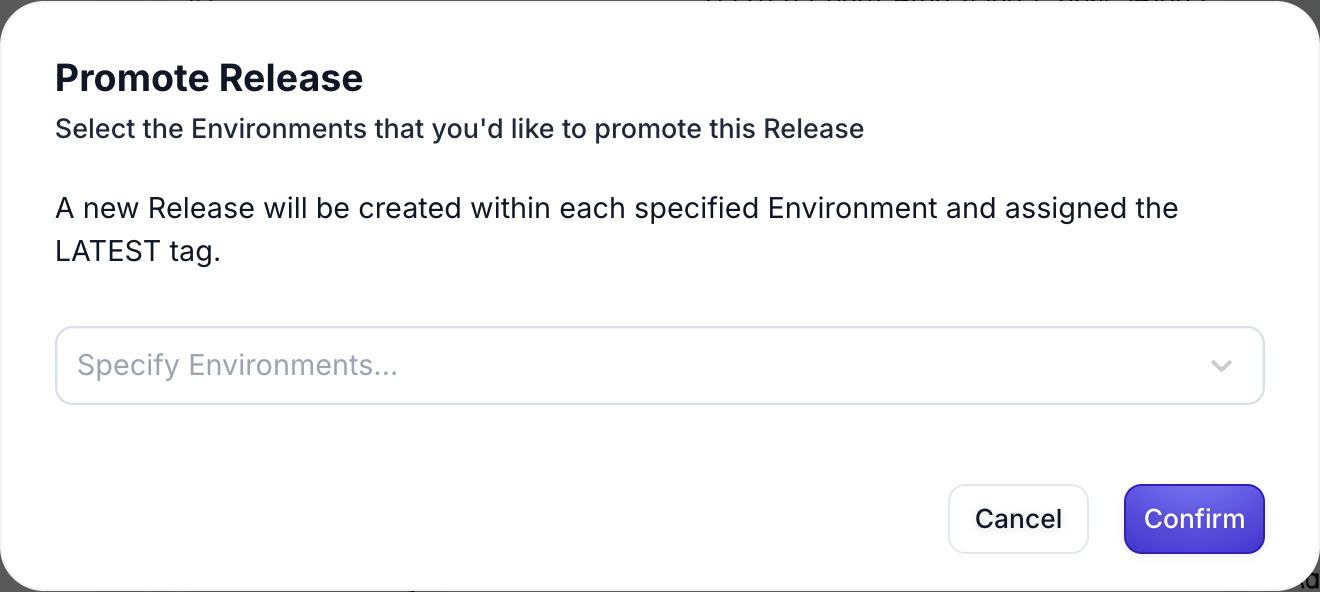

This opens a modal where you can select which Environment(s) you want to promote that Release to.

Upon promotion, you’ll find a new Release in the Release History for each of the Environments that you had selected, making it easy to track deployment history across your different environments.
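One way to picture per-Environment Release History is the Python sketch below. The `Deployment` and `Release` classes and the `promoted_from` field are hypothetical modeling choices, not Vellum's data model or SDK; the only behavior taken from this changelog is that promotion adds a new Release to each selected Environment.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Release:
    version: str
    promoted_from: str = ""  # source Environment; empty for a direct deploy


@dataclass
class Deployment:
    # Release History is tracked separately for each Environment.
    history: Dict[str, List[Release]] = field(default_factory=dict)

    def deploy(self, version: str, environments: List[str]) -> None:
        """Create a new Release in each selected Environment."""
        for env in environments:
            self.history.setdefault(env, []).append(Release(version))

    def promote(self, version: str, source: str, targets: List[str]) -> None:
        """Copy an existing Release from `source` into each target Environment."""
        if not any(r.version == version for r in self.history.get(source, [])):
            raise ValueError(f"{version} has not been released to {source}")
        for env in targets:
            self.history.setdefault(env, []).append(
                Release(version, promoted_from=source)
            )


deployment = Deployment()
deployment.deploy("1.2.0", ["Development"])
deployment.promote("1.2.0", "Development", ["Staging", "Production"])
```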

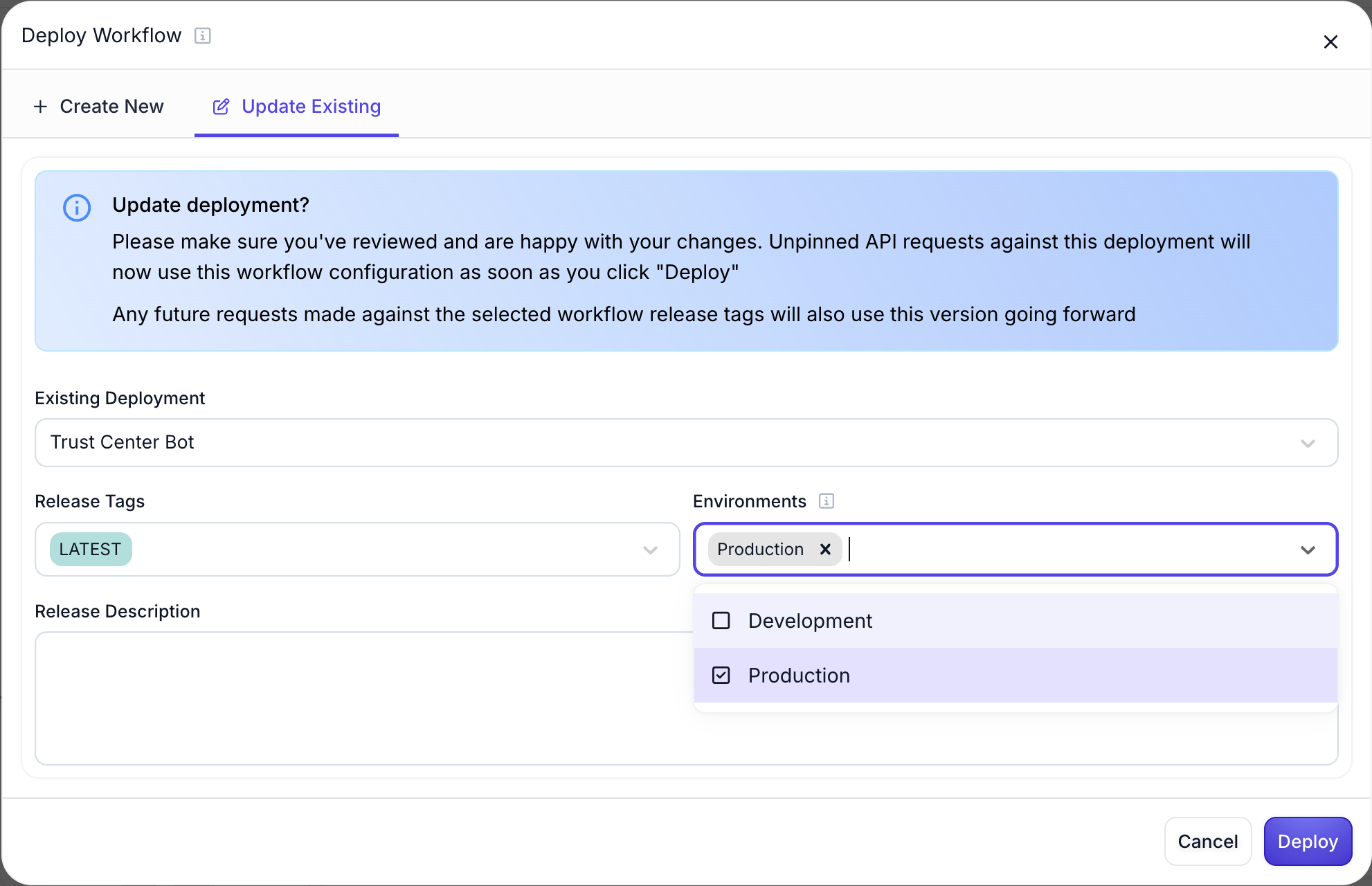

Deploying to Multiple Environments

July 16th, 2025

When you deploy a Prompt or Workflow, you can now deploy not only to your currently active Environment, but also opt in to deploying to additional Environments at the same time. This is useful if you want to push an update to, say, Development and Staging at once, rather than deploying to one, switching your active Environment, and then deploying to the other.

This enhancement streamlines your deployment process by reducing the number of steps needed to deploy across multiple environments, making it easier to maintain consistency across your development pipeline.

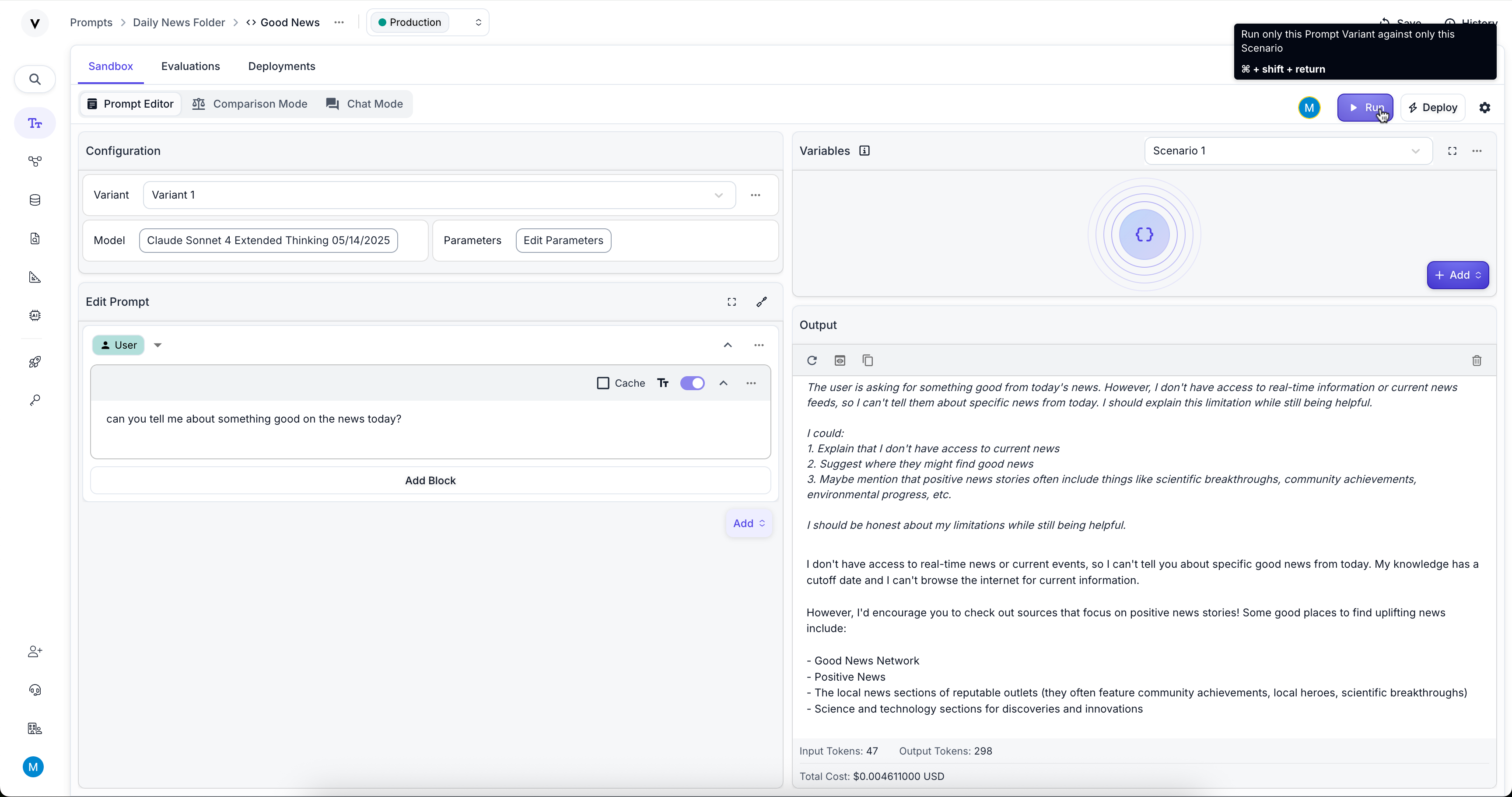

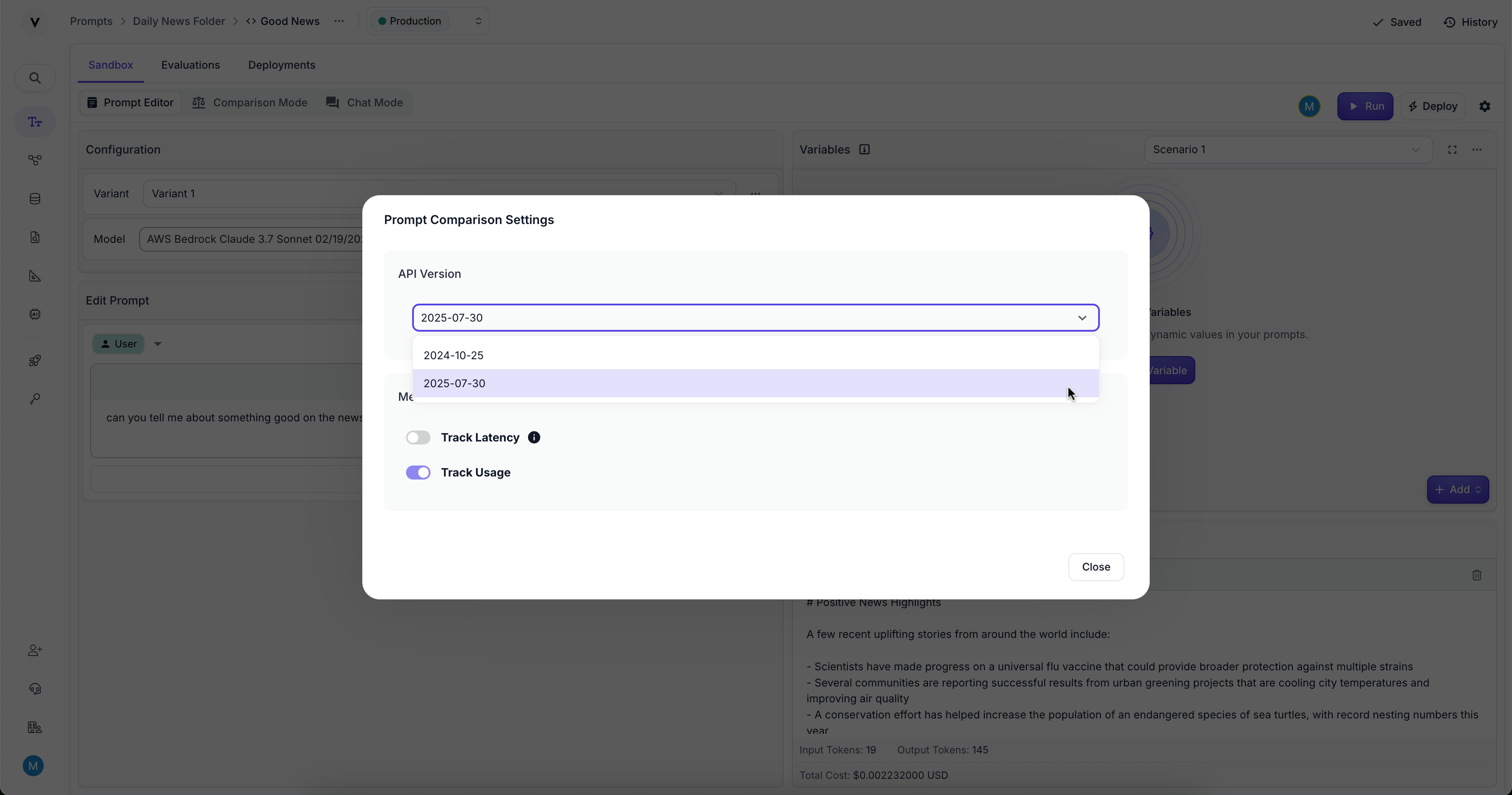

Reasoning Outputs in Prompt Sandboxes

July 15th, 2025

First-class Reasoning Outputs are now supported within the Prompt Sandbox. This new feature allows you to see the reasoning behind a model’s response, providing greater transparency and insight into how the model arrived at its conclusions.

Given that Reasoning Outputs are a new feature enabled by the latest Vellum API Version, you’ll need to specify API Version 2025-07-30 in the Prompt Sandbox Editor Settings.

API Versioning

July 15th, 2025

We’re excited to introduce the concept of API Versions, which allow you to specify which version of the Vellum API you want to use. This gives you control over when to enable new API features that may introduce breaking changes that you have to account for.

You can specify which version of Vellum’s API you’d like to use by providing an X-API-VERSION header as part of your request. For example:
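A minimal Python sketch of building such request headers follows. Only the X-API-VERSION header name and the version value 2025-07-30 come from this changelog; the `build_headers` helper and the X-API-KEY header name are assumptions for illustration, so check the API documentation for the exact authentication header.

```python
from typing import Dict, Optional

API_VERSION_HEADER = "X-API-VERSION"


def build_headers(api_key: str, api_version: Optional[str] = None) -> Dict[str, str]:
    """Build request headers, pinning an API version only when one is given."""
    headers = {
        "X-API-KEY": api_key,  # assumed auth header name
        "Content-Type": "application/json",
    }
    if api_version is not None:
        headers[API_VERSION_HEADER] = api_version
    return headers


pinned = build_headers("my-api-key", api_version="2025-07-30")
unpinned = build_headers("my-api-key")  # no version header is sent
```

Leaving the header out keeps the request on whatever version the API treats as its default.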

The Vellum API has two versions today:

- 2024-10-25: the default version, which is used if no X-API-VERSION header is provided, and is used by all versions of our SDKs prior to 1.0.0

- 2025-07-30: the latest version, which includes new features and improvements

The first new feature to go live with our latest API Version 2025-07-30 is Reasoning Output support for Prompt Deployments. If a model supports thinking/reasoning blocks, then they’ll come through as differentiated blocks in the Vellum API response.

For additional details on API Versioning in Vellum, check out our API Version documentation.

Support for Grok 4 via Vellum

July 14th, 2025

Vellum now supports xAI’s latest model Grok 4.

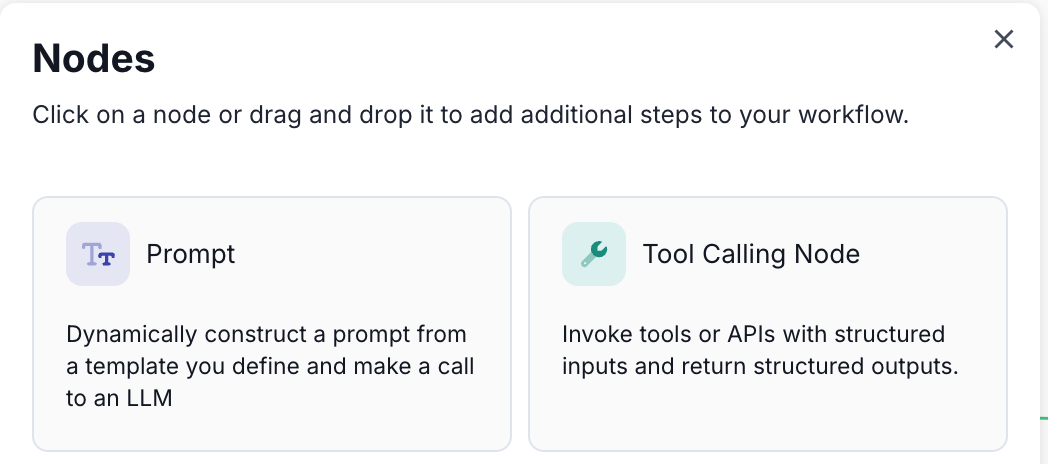

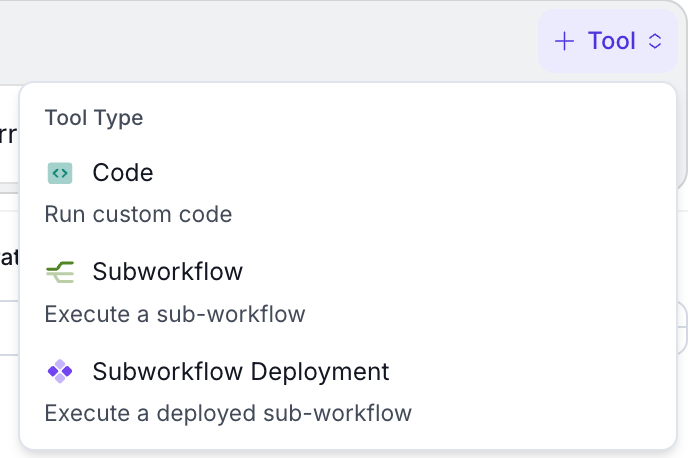

Tool Calling Node

July 13th, 2025

We’re excited to introduce the Tool Calling Node, a new Workflow Node that dramatically simplifies function calling within Vellum Workflows. Previously, implementing function calling required manually defining OpenAPI schemas, handling complex loop logic, and parsing function call outputs - a tedious and error-prone process.

The initial release supports three types of tools:

- Raw Code Execution: Execute custom Python or TypeScript code with automatic schema inference

- Inline Subworkflows: Call other workflows defined inline for modular design

- Subworkflow Deployments: Execute deployed subworkflows with proper version control

The Tool Calling Node provides two accessible outputs for downstream nodes:

- chat_history: The accumulated list of messages managed during execution, including all function calls and responses

- text: The final string output from the model after all tool calling iterations are complete

The node automatically infers the schema needed for each tool type and keeps calling the prompt until a final text output is generated, making function calling Workflows much more straightforward to build and maintain.
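To make the loop concrete, here is a simplified Python sketch of the general pattern: infer a schema from each tool's signature, then keep calling the model until it returns text. It is not the Tool Calling Node's implementation. The message shapes, `infer_schema`, `run_tool_loop`, and the scripted stand-in model are all hypothetical.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]


def infer_schema(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Derive a minimal parameter schema from a function's type annotations."""
    type_names = {int: "integer", float: "number", str: "string", bool: "boolean"}
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "parameters": {n: type_names.get(p.annotation, "string") for n, p in params.items()},
    }


def run_tool_loop(
    model: Callable[[List[Message], List[Dict[str, Any]]], Message],
    tools: List[Callable[..., Any]],
    prompt: str,
    max_iterations: int = 10,
) -> Tuple[List[Message], str]:
    """Call the model repeatedly, executing requested tools, until it returns text."""
    by_name = {fn.__name__: fn for fn in tools}
    schemas = [infer_schema(fn) for fn in tools]
    chat_history: List[Message] = [{"role": "user", "text": prompt}]
    for _ in range(max_iterations):
        reply = model(chat_history, schemas)
        if reply["type"] == "text":
            chat_history.append({"role": "assistant", "text": reply["text"]})
            return chat_history, reply["text"]
        result = by_name[reply["name"]](**reply["arguments"])
        chat_history.append({"role": "assistant", "function_call": reply})
        chat_history.append({"role": "function", "name": reply["name"], "text": str(result)})
    raise RuntimeError("no final text output within max_iterations")


def add(a: int, b: int) -> int:
    return a + b


def scripted_model(chat_history: List[Message], schemas: List[Dict[str, Any]]) -> Message:
    # Stand-in for an LLM: request `add` once, then answer with its result.
    if chat_history[-1]["role"] == "function":
        return {"type": "text", "text": f"The sum is {chat_history[-1]['text']}."}
    return {"type": "function_call", "name": "add", "arguments": {"a": 2, "b": 3}}


chat_history, text = run_tool_loop(scripted_model, [add], "What is 2 + 3?")
```

The two return values mirror the node's `chat_history` and `text` outputs; the `max_iterations` guard is an added safety choice in this sketch, not a documented setting.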

For detailed documentation, see Tool Calling Node.

Environments

July 13th, 2025

Environments are now a first-class concept in Vellum and are used to manage different deployment stages (Development, Staging, Production). This major update replaces the previous approach of using multiple deployments or release tags for environment differentiation.

Key Features

Environment-Scoped Resources: All Workspaces begin with a single “Production” Environment, and you can create additional Environments as needed. The new Environment picker appears at the top of most pages throughout Vellum, representing your active Environment context.

Environment-Scoped API Keys: API Keys are now scoped to specific Environments. When you call an API, it performs actions within the context of the Environment from which the API key was created. Vellum API Keys are now managed under your Workspace Settings.

Separate Release History: Each Deployment now maintains separate Release history per Environment. When you deploy a Prompt or Workflow, you can choose which Environment(s) to deploy to, creating a new Release for each selected Environment.

Environment-Specific Document Indexes: Documents uploaded to a Document Index are now Environment-scoped. Documents will only appear in search results when the search request is performed within the same Environment context.

Environment-Filtered Monitoring: Execution and monitoring data in Prompt/Workflow Deployments are now filtered to show only requests made within the active Environment context.

Migration Impact

This update fundamentally changes how you manage deployments across environments:

- Create Environments: Create new Environments as needed (e.g., Development, Staging) to isolate your development and production workflows.

- Recommended Approach: Prefer to have a single Deployment per Sandbox. Use Release Tags for version management (e.g., semantic versioning).

- API Key Management: Maintain separate API keys for each Environment to ensure proper isolation.

- Document Management: Remember that when a Document is uploaded, it’s only available within the Environment it was uploaded to. If you need the same Document in multiple Environments, you’ll need to upload it separately in each Environment.

For more details, see the updated documentation on deployment lifecycle management and environments.

This is just the start of our Environments journey! We have many more features planned to enhance your deployment management experience, including Environment Variables and Environment-level permissions.

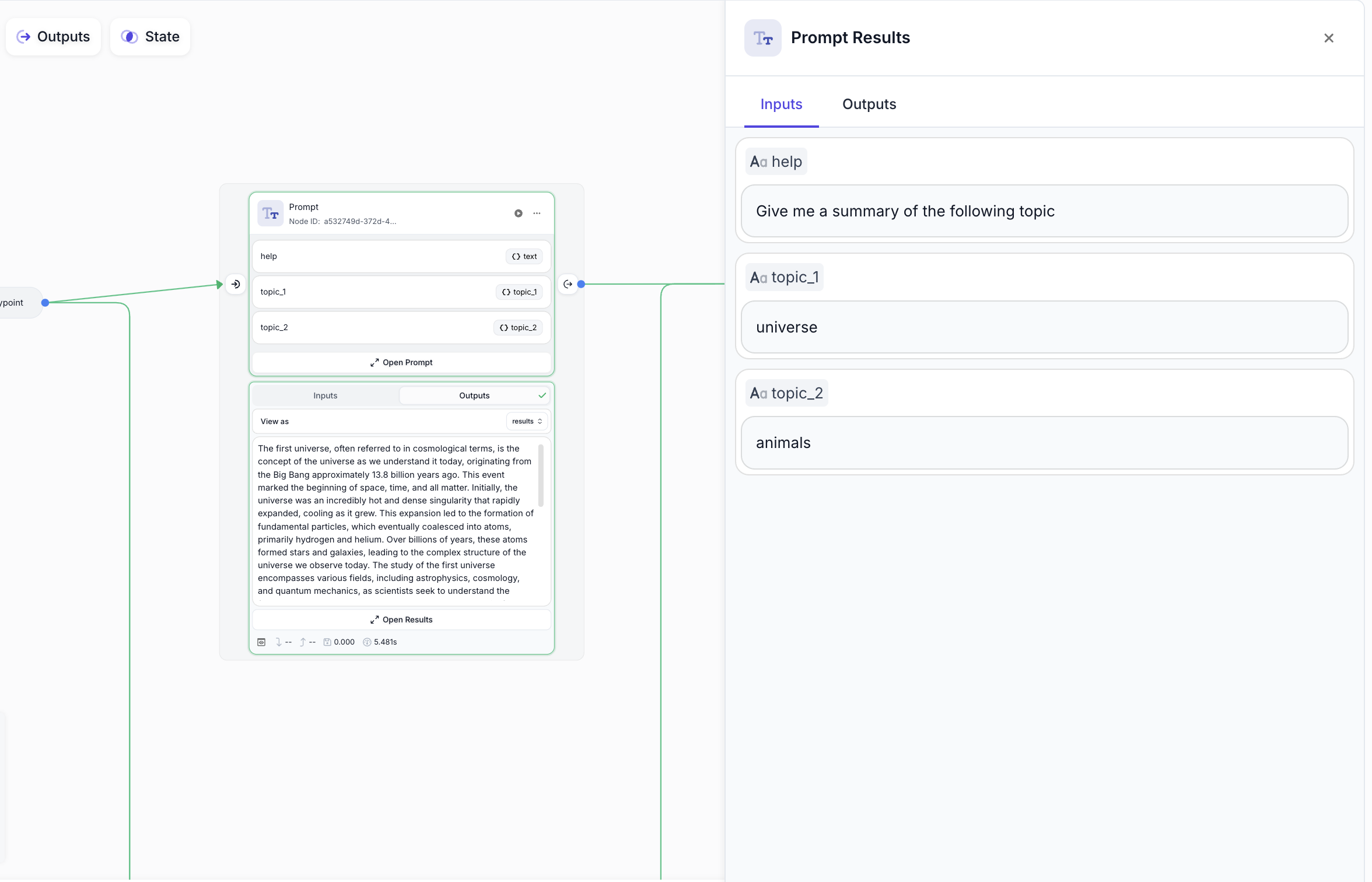

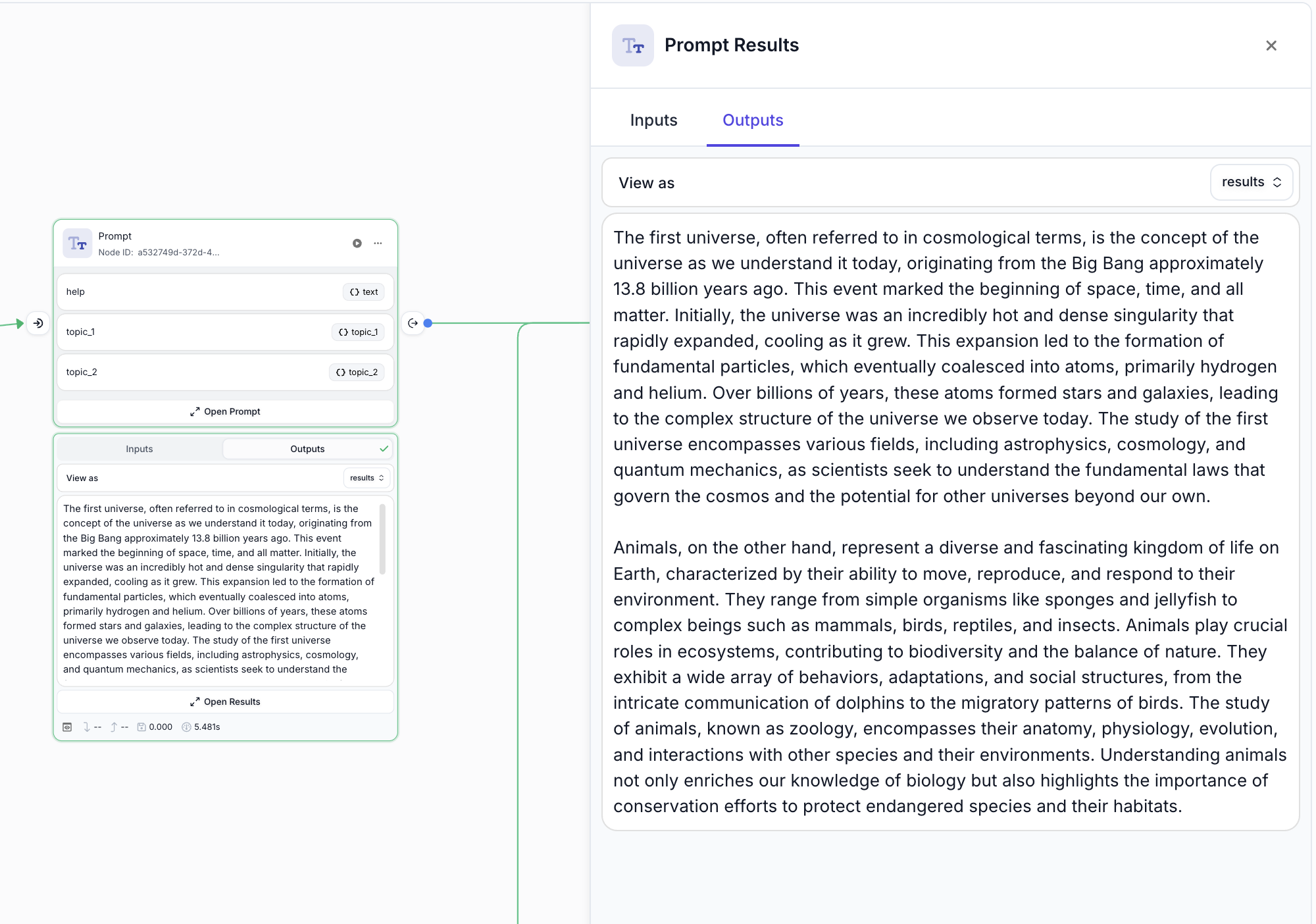

Node Results Side Panel

July 11th, 2025

You can now view the entirety of a Node’s results in a dedicated side panel by clicking the Open Results button. The Node Results panel provides more screen real estate to properly view the inputs and outputs for the most recent execution, making it easier to inspect and debug your Workflow executions.

The side panel includes tabbed navigation between Inputs and Outputs, and provides a cleaner interface for reviewing execution results with better formatting and more space for complex data structures.

Gemini Embedding Model Support

July 6th, 2025

We’ve added support for the gemini-embedding-001 model hosted on Google Vertex AI to Vellum.

Editable Document Keywords

July 4th, 2025

You can now edit the keywords associated with a Document from the UI. This will trigger a re-indexing of the Document in all of its associated Document Indexes.

Workflow Level Packages

July 2nd, 2025

Previously, package dependencies for Code Execution Nodes needed to be defined on a node-by-node basis. You can now define package dependencies at the Workflow level through the Workflow Settings modal.

Aside from consolidating dependencies across nodes in a single, reusable Container Image, this comes with an additional performance benefit at runtime, usually saving anywhere between 2-10 seconds per Code Node execution. The Container Images generated from these settings are subject to the same limits defined here.

Private Package Repositories

July 1st, 2025

We’ve added the ability to use packages from private repositories when using Code Execution Nodes in Workflows and also in Code Metrics. Currently only Python and AWS CodeArtifact repositories are supported.

To add a private repository, navigate to the Private Package Repository page. You can also add a repository by clicking the “Add Private Repository” button in the dropdown of the new Repository field when adding a package.

Expression Input Output Type Improvements

July 1st, 2025

We’ve significantly enhanced the expression input dropdown to make it easier to understand and navigate Node output types in Workflows.

Explicit Output Type Display

The expression input dropdown now explicitly shows Node output types in a separate menu, making it much clearer what type of data you’re selecting when building expressions. This helps prevent type mismatches and makes workflow building more intuitive.

Structured Outputs Warning

When a Prompt Node has Structured Outputs enabled, the interface now provides clear warnings to help you select the appropriate JSON output. This prevents common configuration errors and guides you toward the correct output type for your use case.

Full Keyboard Accessibility

The new interface is fully keyboard accessible with intuitive navigation:

- Up and Down arrow keys: Navigate through the main menu items

- Left and Right arrow keys: Step in and out of submenus

- Enter: Select the highlighted item

- Escape: Close the dropdown or exit submenus

This update makes building complex Workflows more accessible and reduces the learning curve for new users while providing power users with faster, keyboard-driven navigation options.